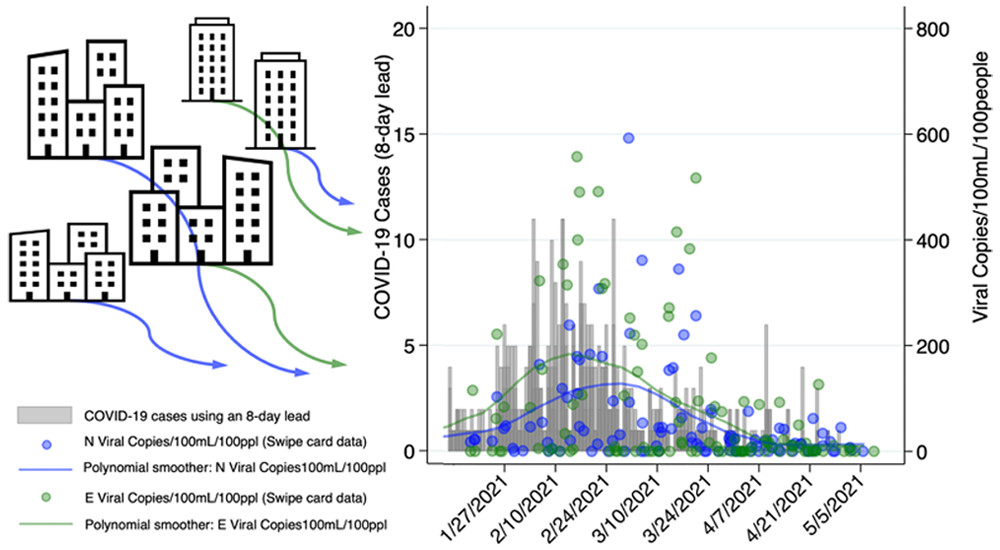

Subsewershed analyses of the impacts of inflow and infiltration on viral pathogens and antibiotic resistance markers across a rural sewer system

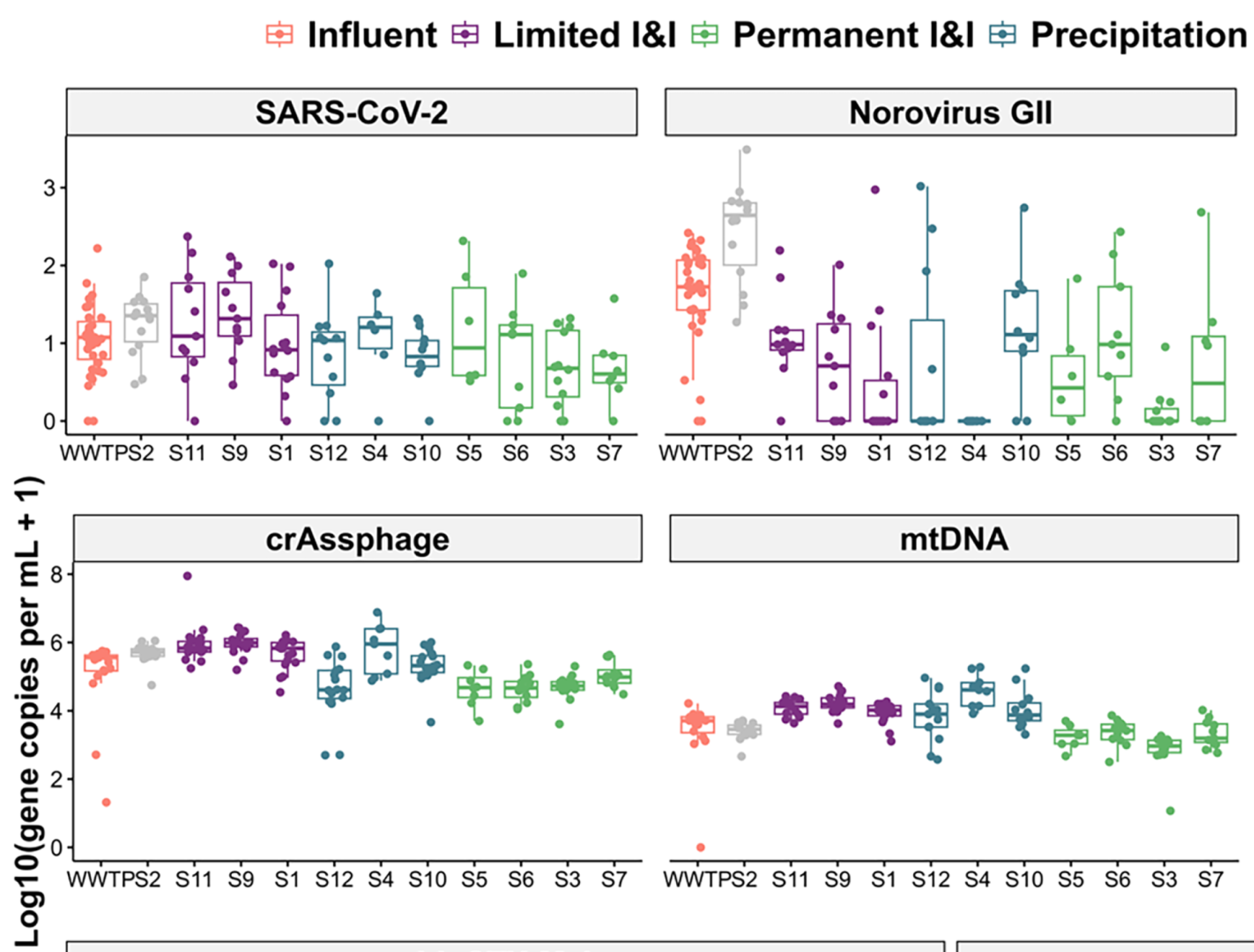

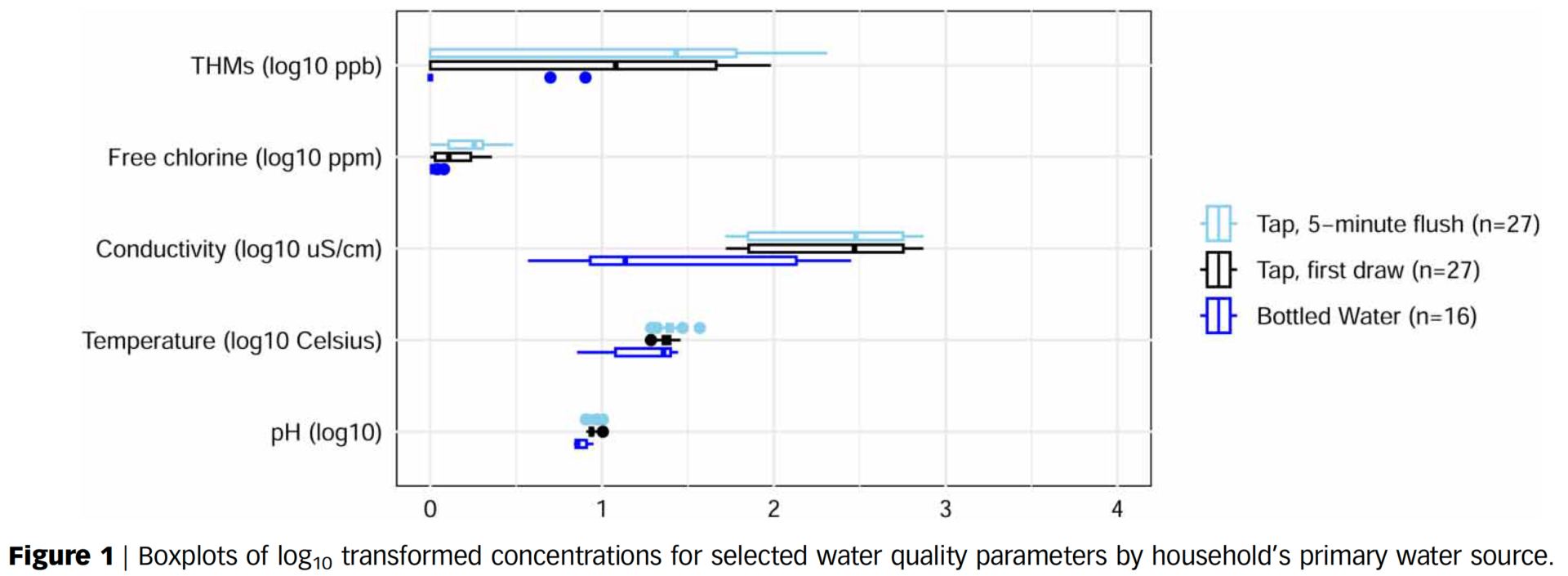

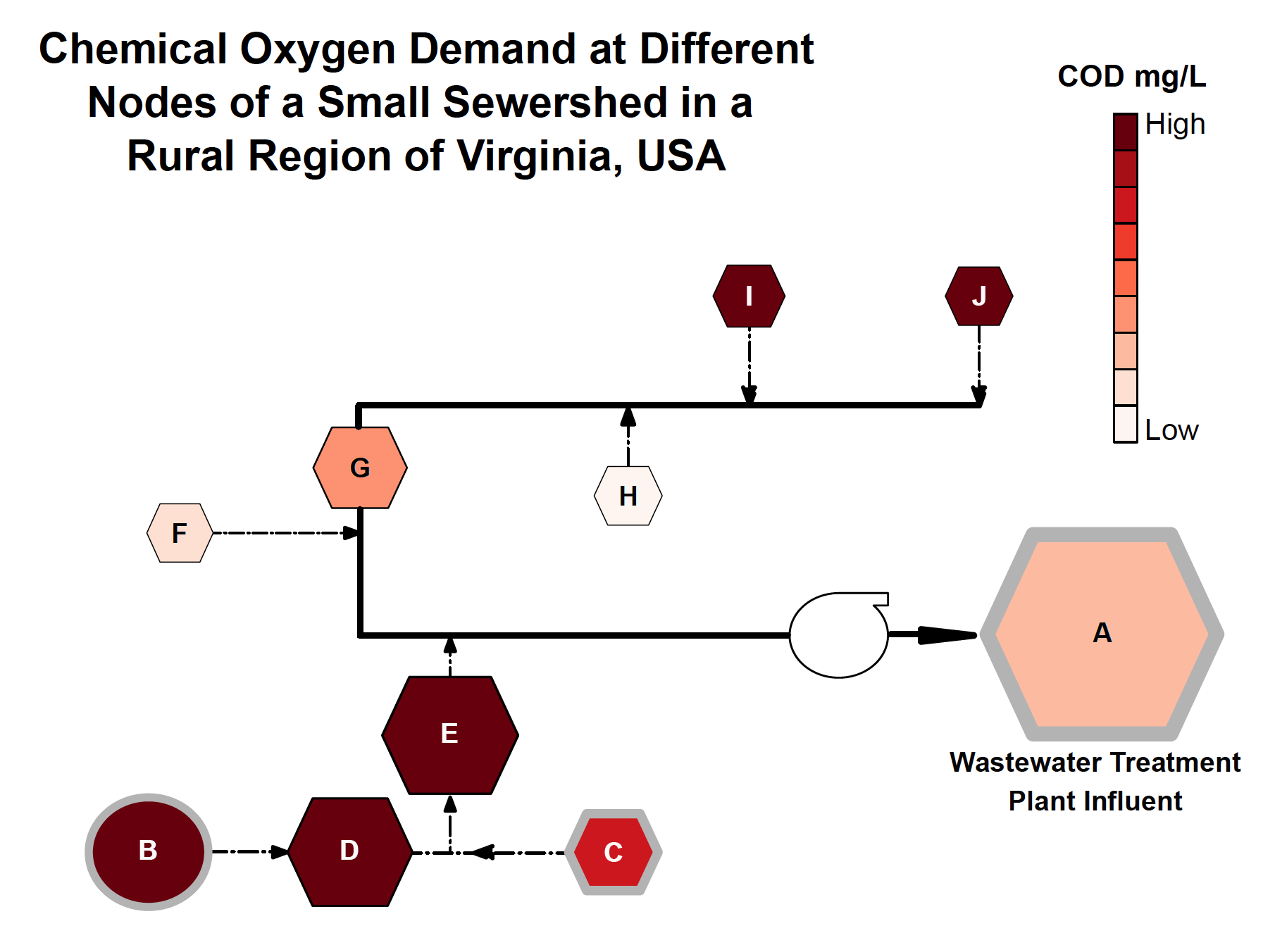

Abstract/Summary: As wastewater-based surveillance (WBS) is increasingly used to track community-level disease trends, it is important to understand how pathogen signals can be altered by phenomena that occur within sewersheds, such as inflow and infiltration (I&I). Our objectives were to characterize I&I across a rural sewershed and assess potential impacts on viral (rotavirus, norovirus GII, and SARS-CoV-2), fecal indicator (HF183, the hCYTB484 gene specific to the human mitochondrial genome, and crAssphage), and antimicrobial resistance (intI1, blaCTX-M-1) targets. In a small town in Virginia (USA), we collected 107 wastewater samples at monthly intervals over a 12-month period (2022–2023) at the wastewater treatment plant (WWTP) influent and 11 up-sewer sites. Viral, fecal indicator, and antimicrobial resistance targets were enumerated using ddPCR. We observed the highest concentrations of human fecal markers and of intI1, a measure of anthropogenic pollution and antibiotic resistance, in up-sewer sites with limited I&I. However, median viral gene copy concentrations were higher at the WWTP than at 100 % (n = 11), 90 % (n = 10), and 55 % (n = 6) of up-sewer sites for rotavirus, norovirus GII, and SARS-CoV-2, respectively. After adjusting for covariates (Ba, COD, dissolved oxygen, groundwater depth, precipitation, sampling date) using generalized linear models, moderate to high I&I was associated with statistically significant reductions in log10-transformed rotavirus and norovirus GII concentrations across the sewershed (coefficients = -0.7 and -0.9, p < 0.001, n = 95), though not for SARS-CoV-2 (coefficient = -0.2, p = 0.181, n = 95). Overall, we found that while I&I can diminish biomarker signals throughout a sewershed, including at the WWTP influent, I&I impacts vary depending on the target, and pathogen biomarker signals were, on average, higher and less variable over time at the WWTP compared to most up-sewer sites.
To our knowledge, this is the first study to assess in situ I&I impacts on multiple WBS targets. Taken together, our findings highlight challenges and tradeoffs associated with different sampling strategies for different WBS targets in heavily I&I-impacted systems.
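
To help interpret the model coefficients reported above, the following minimal sketch converts a coefficient on the log10 scale into an approximate fold change and percent reduction in concentration. It assumes the coefficients come from a model with an identity link fitted to log10-transformed concentrations, which the summary does not state explicitly; the function names `fold_change` and `percent_reduction` are hypothetical and used only for illustration.

```python
# Illustrative only: interprets a coefficient on the log10 scale as a
# multiplicative change in concentration. Assumes an identity-link model
# on log10-transformed concentrations (an assumption, not confirmed by
# the summary).

def fold_change(log10_coefficient: float) -> float:
    """Multiplicative change in concentration implied by a log10 coefficient."""
    return 10.0 ** log10_coefficient


def percent_reduction(log10_coefficient: float) -> float:
    """Percent decrease in concentration implied by a log10 coefficient."""
    return (1.0 - fold_change(log10_coefficient)) * 100.0


# Coefficients as reported in the summary for moderate to high I&I.
reported = {"rotavirus": -0.7, "norovirus GII": -0.9, "SARS-CoV-2": -0.2}

for target, coef in reported.items():
    print(f"{target}: x{fold_change(coef):.2f} "
          f"({percent_reduction(coef):.0f} % lower)")
```

Under that assumption, the reported coefficients would correspond to roughly 80 % and 87 % lower adjusted concentrations for rotavirus and norovirus GII, respectively. The SARS-CoV-2 coefficient would correspond to roughly 37 % lower, but it was not statistically significant (p = 0.181), so this value should not be read as evidence of a reduction.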